Posts tagged "development-tools"

January 12, 2026

So where can we use our Claude subscription then? - There’s been confusion about where we can actually use a Claude subscription. This comes after Anthropic took action to prevent third-party applications from spoofing the Claude Code harness to use Claude subscriptions.

[read more...]

August 3, 2025

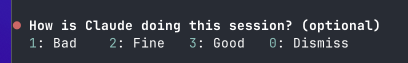

Claude Code's Feedback Survey - It seems this weekend a bunch of people have reported seeing Claude Code ask them for feedback on how well it is doing in their current session. It looks like this:

And everyone finds it annoying. I feel things like this are akin to advertising. For a paid product, feedback surveys like this should be opt-in. Ask me at the start of the session if I’m OK with providing feedback. Give me the parameters of the feedback and let me opt in. Don’t pester me when I’m doing work.

I went digging in the code to see if maybe there is an undocumented setting I could slam into settings.json to hide this annoyance. What I found instead was an environment variable that switches it on even more!

CLAUDE_FORCE_DISPLAY_SURVEY=1 will show that sucker lots!

These are the conditions that control when the survey is shown:

- A minimum time before first feedback (600 seconds / 10 minutes)

- A minimum time between feedback requests (1800 seconds / 30 minutes)

- A minimum number of user turns before showing feedback

- Some probability settings

- Some model restrictions (only shows for certain models) - I’ve only had it come up with Opus.
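The conditions above could combine roughly as follows. This is a minimal Python sketch, not Claude Code's actual implementation: only the two time thresholds come from the list. The function name, `SurveyConfig`, the turn threshold, the probability, the model set, and the idea that the force flag skips every check are all assumptions for illustration.

```python
import random
from dataclasses import dataclass
from typing import Callable, FrozenSet, Optional

# Thresholds taken from the list above.
MIN_SECONDS_BEFORE_FIRST = 600    # 10 minutes
MIN_SECONDS_BETWEEN = 1800        # 30 minutes


@dataclass(frozen=True)
class SurveyConfig:
    """Hypothetical settings; the real values are not known."""
    min_user_turns: int
    show_probability: float
    allowed_models: FrozenSet[str]


def should_show_survey(
    session_seconds: float,
    seconds_since_last: Optional[float],  # None if no survey shown yet
    user_turns: int,
    model: str,
    config: SurveyConfig,
    force: bool = False,                  # stands in for CLAUDE_FORCE_DISPLAY_SURVEY=1
    rng: Callable[[], float] = random.random,
) -> bool:
    # Assumption: the force flag bypasses every other check.
    if force:
        return True
    if session_seconds < MIN_SECONDS_BEFORE_FIRST:
        return False
    if seconds_since_last is not None and seconds_since_last < MIN_SECONDS_BETWEEN:
        return False
    if user_turns < config.min_user_turns:
        return False
    if model not in config.allowed_models:
        return False
    # Probability gate: show only on a fraction of eligible checks.
    return rng() < config.show_probability
```

Passing `rng` as a parameter keeps the probability gate deterministic when experimenting with the other conditions.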

Asking for feedback is totally ok. But don’t interrupt my work session to do it. I hope this goes away or there is a setting added to opt out completely.

July 22, 2025

AI Coding Assistants: The $200 Monthly Investment That Pays for Itself - Lately the price of AI coding assistants has been climbing. Claude Code’s Max plan runs $200 per month. Cursor’s Ultra tier is another $200. Even GitHub Copilot has crept up to $39 per month. It’s easy to dismiss these as too expensive and move on.

But let’s do the math.

The Simple Economics

A mid-level developer in the US typically costs their employer around $100 per hour when you factor in salary, benefits, and overhead. At that rate, an AI coding assistant needs to save just 2 hours per month to pay for itself.

Two hours. That’s one debugging session cut short. One feature implemented faster. One refactoring completed smoothly instead of stretching into the evening.

I’ve been using these tools for the past year, and I can confidently say they save me 2 hours in a typical day, not a month.
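As a rough sketch of that arithmetic in Python: the $200 subscription and the $100 hourly cost are the figures above, while the function names and the 20 working days per month are assumptions for illustration.

```python
def break_even_hours(monthly_cost: float, hourly_rate: float) -> float:
    """Hours the tool must save per month to cover its subscription."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_cost / hourly_rate


def monthly_net_value(
    hours_saved_per_day: float,
    working_days: int,      # assumption: roughly 20 working days per month
    hourly_rate: float,
    monthly_cost: float,
) -> float:
    """Value of the time saved in a month, minus the subscription cost."""
    return hours_saved_per_day * working_days * hourly_rate - monthly_cost


print(break_even_hours(200, 100))          # hours needed per month
print(monthly_net_value(2, 20, 100, 200))  # net dollars per month
```

With those inputs, the break-even point is 2 hours per month, and saving 2 hours a day would be worth about $3,800 a month after the subscription cost.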

The Chicken and Egg Problem

Here’s the catch: how do you prove the value before you have the subscription? Your boss wants evidence, but you need the tool to generate that evidence.

This is a classic bootstrapping problem, but there are ways around it:

1. Start with Free Trials

Most AI coding assistants offer free trials or limited, lower-cost tiers. Use them strategically:

- Time yourself on similar tasks with and without the assistant

- Document specific examples where AI saved time

- Track error rates and bug fixes

2. Run a Pilot Program

Propose a 3-month pilot with clear success metrics:

- Reduced time to complete user stories

- Fewer bugs making it to production

- Increased test coverage

- Developer satisfaction scores

3. Use Personal Accounts for Proof of Concept

Yes, it’s an investment, but spending $200 of your own money for one month to demonstrate concrete value can be worth it. Track everything meticulously and present hard data.

[read more...]

July 21, 2025

Context7: The Missing Link for AI-Powered Coding - If you’ve spent any time working with AI code assistants like Cursor or Claude, you’ve probably encountered this frustrating scenario: you ask about a specific library or framework, and the AI confidently provides outdated information or hallucinates methods that don’t exist. This happens because most LLMs are trained on data that’s months or even years old.

Enter Context7 – a clever solution to a problem that not many developers know about, but everyone experiences.

What is Context7?

Context7 is a documentation platform specifically designed for Large Language Models and AI code editors. It acts as a bridge between your AI coding assistant and up-to-date, version-specific documentation.

Instead of relying on an LLM’s training data (which might be outdated), Context7 pulls real-time documentation directly from the source. This means when you’re working with a specific version of a library, your AI assistant gets accurate, current information.

The Problem It Solves

AI coding assistants face several documentation challenges:

- Outdated Training Data: Most LLMs are trained on data that’s at least several months old, missing recent API changes and new features

- Hallucinated Examples: AIs sometimes generate plausible-sounding but incorrect code examples

- Version Mismatches: Generic documentation doesn’t account for the specific version you’re using

- Context Overload: Pasting entire documentation files into your prompt wastes tokens and confuses the model

I’ve personally wasted hours debugging code that looked correct but used deprecated methods or non-existent parameters. Context7 aims to eliminate this friction.

[read more...]