So where can we use our Claude subscription then? - There’s been confusion about where we can actually use a Claude subscription. This comes after Anthropic took action to prevent third-party applications from spoofing the Claude Code harness to use Claude subscriptions.

[read more...]

January 12, 2026

January 2, 2026

A Month Exploring Fizzy - In their book Getting Real, 37signals talk about Open Doors — the idea that you should give customers access to their data through RSS feeds and APIs. Let them get their information when they want it, how they want it. Open up and good things happen.

Fizzy takes that seriously. When 37signals released Fizzy with its full git history available, they didn’t just open-source the code — they shipped a complete API and webhook system too. The doors were wide open baby!

So I dove in — reading the source, building tools, and sharing what I found. Every time curiosity kicked in, there was a direct path from “I wonder if…” to something I could actually try and execute. This post is a catch-all for my very bubbly month of December.

[read more...]

December 10, 2025

Fizzy's Pull Requests: Who Built What and How - Sifting through Fizzy’s pull requests, it was fascinating to see how the 37signals team thinks through problems, builds solutions, and ships software together. Outside of their massive contributions to open source libraries, the podcast and the books — this was a chance to see up close, with real code, the application building process.

Below you will read which 37signals team members owned which domains in this project, and which PRs best demonstrate their craft. If you’re new to the codebase (or want to level up in specific areas), I will point you to the PRs worth studying. Each section highlights an engineer’s domain knowledge and the code reviews that showcase their thinking.

[read more...]

December 8, 2025

Fizzy Design Evolution: A Flipbook from Git - After writing about the making of Fizzy told through git commits, I wanted to see the design evolution with my own eyes. Reading about “Let’s try bubbles” and “Rename bubbles => cards” is one thing. Watching the interface transform over 18 months is another.

So I got to work: I went through each day of commits in the Fizzy repository, got the application to a bootable state, seeded the database, and took a screenshot. Then I stitched those screenshots together into a flipbook-style video.

Here’s the final result - I hope you enjoy it! Read on below for details about the process and the backing music.

[read more...]

December 3, 2025

Vanilla CSS is all you need - Back in April 2024, Jason Zimdars from 37signals published a post about modern CSS patterns in Campfire. He explained how their team builds sophisticated web applications using nothing but vanilla CSS. No Sass. No PostCSS. No build tools.

The post stuck with me. Over the past year and a half, 37signals has released two more products (Writebook and Fizzy) built on the same nobuild philosophy. I wanted to know if these patterns held up. Had they evolved?

[read more...]

December 2, 2025

The Making of Fizzy, Told by Git - Today Fizzy was released and the entire source code of its development history is open for anyone to see. DHH announced on X that the full git history is available - a rare opportunity to peek behind the curtain of how a 37signals product comes together.

I cloned down the repository and prompted Claude Code:

“Can you go through the entire git history and write a documentary about the development of this application. What date the first commit was. Any major tweaks, changes and decisions and experiments. You can take multiple passes and use sub-agents to build up a picture. Make sure to cite commits for any interesting things. If there is anything dramatic then make sure to see if you can figure out decision making. Summarize at the end but the story should go into STORY.md”

It responded with:

“This is a fascinating task! Let me create a comprehensive investigation plan and use multiple agents to build up a complete picture of this project’s history.”

Here is the story of Fizzy - as interpreted by Claude - from the trail of git commits. Enjoy!

[read more...]

Fizzy Webhooks: What You Need to Know - Fizzy is a new issue tracker (source available) from 37signals with a refreshingly clean UI. Beyond looking good, it ships with a solid webhook system for integrating with external services.

For most teams, webhooks are the bridge between the issues you track and the tools you already rely on. They let you push events into chat, incident tools, reporting pipelines, and anything else that speaks HTTP. If you are evaluating Fizzy or planning an integration, understanding what these webhooks can do will save you time.

I also put together a short PDF with the full payload structure and example code, which I link at the end of this post if you want to go deeper.

What could we build?

Here are a few ideas for things you could build on top of Fizzy’s events:

- A team metrics dashboard that tracks how long cards take to move from card_published to card_closed and which assignees or boards close issues the fastest.

- Personal Slack or Teams digests that send each person a daily summary of cards they created, were assigned, or closed based on card_published, card_assigned, card_unassigned, and card_closed events.

- A churn detector that flags cards that bounce between columns or get sent back to triage repeatedly using card_triaged, card_sent_back_to_triage, and card_postponed.

- A cross-board incident view that watches card_board_changed to keep a separate dashboard of cards moving into your incident or escalation boards.

- A comment activity stream that ships comment_created events into a search index or knowledge base so you can search discussions across boards.
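As a rough sketch of the first idea, the snippet below computes how long each card took to go from card_published to card_closed. The event names come from the list above; the record shape (`action`, `card_id`, `created_at`) is a simplified assumption for illustration and is not Fizzy's actual payload structure.

```python
from datetime import datetime


def cycle_times(events):
    """Return {card_id: seconds between card_published and card_closed}.

    Each event is a dict with hypothetical keys: action, card_id, created_at.
    """
    published = {}
    durations = {}
    # Process events in chronological order, whatever order they arrived in.
    ordered = sorted(events, key=lambda e: datetime.fromisoformat(e["created_at"]))
    for event in ordered:
        when = datetime.fromisoformat(event["created_at"])
        card = event["card_id"]
        if event["action"] == "card_published":
            # Keep the first publish time for the card.
            published.setdefault(card, when)
        elif event["action"] == "card_closed" and card in published:
            # A later close (after a reopen) overwrites an earlier one.
            durations[card] = (when - published[card]).total_seconds()
    return durations


# Made-up sample events, not real Fizzy data.
SAMPLE_EVENTS = [
    {"action": "card_closed", "card_id": 1, "created_at": "2025-12-01T11:30:00+00:00"},
    {"action": "card_published", "card_id": 1, "created_at": "2025-12-01T09:00:00+00:00"},
    {"action": "card_published", "card_id": 2, "created_at": "2025-12-01T10:00:00+00:00"},
]

print(cycle_times(SAMPLE_EVENTS))
```

Card 2 has no close event yet, so it is left out of the result. A real receiver would map whatever fields the webhook actually sends onto this shape first.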

If you want to go deeper, you can also build more opinionated tools that surface insights and notify people who never log in to Fizzy:

- Stakeholder status reports that email non-technical stakeholders a weekly summary of key cards: what was created, closed, postponed, or sent back to triage on their projects. You can group by label, board, or assignee and generate charts or narrative summaries from card_published, card_closed, card_postponed, and card_sent_back_to_triage events.

- Capacity and load alerts that watch for people who are getting overloaded. For example, you could send a notification to a manager when someone is assigned more than N open cards, or when cards assigned to them sit in the same column for too long without a card_triaged or card_closed event.

- SLA and escalation notifications that integrate with PagerDuty or similar tools. When certain cards (for example, labeled “Incident” or on a specific board) are not closed within an agreed time window, you can trigger an alert or automatically move the card to an escalation board using card_postponed, card_board_changed, and card_closed.

- Customer-facing status updates that keep clients in the loop without giving them direct access to Fizzy. You could generate per-customer email updates or a small status page based on events for cards tagged with that customer’s name, combining card_published, card_closed, and comment_created to show progress and recent discussion.

- Meeting prep packs that assemble the last week’s events for a given board into a concise agenda for standups or planning meetings. You can collate newly created cards, reopened work, and high-churn items from card_published, card_reopened, card_triaged, and card_sent_back_to_triage, then email the summary to attendees before the meeting.
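The capacity alert idea (more than N open cards per person) could be sketched roughly like this. The `assignee` and `card_id` fields are assumptions made for the example, and the events are assumed to arrive in chronological order; only the event names are taken from the lists above.

```python
from collections import defaultdict


def overloaded_assignees(events, limit):
    """Return {assignee: open_card_count} for people above `limit` open cards.

    Events are dicts with hypothetical keys: action, card_id, assignee.
    """
    open_cards = defaultdict(set)
    for event in events:
        action, card = event["action"], event["card_id"]
        if action == "card_assigned":
            open_cards[event["assignee"]].add(card)
        elif action == "card_unassigned":
            open_cards[event["assignee"]].discard(card)
        elif action == "card_closed":
            # A closed card no longer counts against anyone.
            for cards in open_cards.values():
                cards.discard(card)
    return {
        person: len(cards)
        for person, cards in open_cards.items()
        if len(cards) > limit
    }


# Made-up sample events, not real Fizzy data.
SAMPLE_ASSIGNMENTS = [
    {"action": "card_assigned", "card_id": 1, "assignee": "ana"},
    {"action": "card_assigned", "card_id": 2, "assignee": "ana"},
    {"action": "card_assigned", "card_id": 3, "assignee": "ana"},
    {"action": "card_assigned", "card_id": 4, "assignee": "ben"},
    {"action": "card_closed", "card_id": 3},
]

print(overloaded_assignees(SAMPLE_ASSIGNMENTS, limit=1))
```

With a limit of 1, only "ana" is flagged, with two cards still open. The notification step (email, chat, PagerDuty) is left out on purpose.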

Here is how to set it up.

[read more...]

November 26, 2025

A Mermaid Validation Skill for Claude Code - AI coding agents generate significantly more markdown documentation than we used to write manually. This creates opportunities to explain concepts visually with mermaid diagrams - flowcharts, sequence diagrams, and other visualizations defined in text. When Claude generates these diagrams, the syntax can be invalid even though the code looks correct. Claude Code skills provide a way to teach Claude domain-specific workflows - in this case, validating diagrams before marking the work complete.

[read more...]

August 23, 2025

First Impressions of Sonic (the stealth xAI Grok coding model?) -

Update (August 26, 2025): Sonic has been confirmed as the new Grok Code model and is officially available as of today. What was once a “stealth” model is now xAI’s publicly released coding assistant.

A new stealth model called Sonic has quietly appeared in places like Opencode and Cursor, and it’s rumored to be xAI’s Grok coding model. I spent a full day working with it inside Opencode, replacing my usual Claude Sonnet 4 workflow, and came away impressed. The short version? It feels like Sonnet, but turbocharged.

Getting Access

Sonic showed up in Opencode’s model selector — no special invite required. Selecting it as the active model made it fully functional, with no setup beyond choosing it in the dropdown. During this stealth period it’s free to use and offers a massive 256,000 token context window. I didn’t encounter any rate limits or throttling all day.

First Test

Instead of a contrived “hello world,” I dropped Sonic into my real workflow. Where I’d usually use Sonnet 4, I asked Sonic to help me build a new Training Center CRUD feature in a Ruby on Rails application.

It generated the models, migrations, controllers, and views exactly as expected and, most importantly, it did it fast. Text streamed out so quickly it was hard to keep up. Compared to Claude Code, I’d estimate roughly 3x the tokens per second, consistently.

That was my wow moment: speed without sacrificing accuracy. It nailed the Rails conventions and felt like a drop-in Sonnet replacement.

My Standard Benchmark

My go-to evaluation prompt for coding models is: “Tell me about this codebase and what the most complex parts are.” On my legacy Rails codebase, Sonic immediately identified the correct overloaded model as the main complexity hotspot, and even suggested ways to refactor it.

The result was nearly identical in quality to what I’d get from Sonnet or even Opus, but again the response came back almost instantly. For day-to-day comprehension and reasoning, Sonic seems to match the best while dramatically cutting wait time.

Comparison to Claude Sonnet

- Reasoning & Accuracy: Nearly identical to Sonnet. No hallucinations, no weird Rails missteps.

- Personality: Very similar, though Sonic felt a bit more positive and agreeable to suggestions.

- Speed: The standout. At least 3x faster than Claude Code/Sonnet, with the speed holding steady across short and long generations.

- Where Sonnet Might Still Win: Hard to say yet. I didn’t hit any reasoning failures, but Sonnet has a longer track record of reliability under heavy workloads.

Bottom line: if you’re used to Sonnet, Sonic feels like the same experience at high speed.

Pricing and Availability

Right now Sonic is free to use in Opencode during its stealth rollout. No public pricing details are available yet, but if it undercuts Sonnet, it could be a winner.

I haven’t seen any rate limits or regional restrictions. Cursor users also report seeing Sonic available as a selectable model.

Initial Verdict

After a full day of work, I would — and did — ship production code with Sonic. It handled CRUD features, comprehension of a large legacy codebase, and various refactors without issue.

The real differentiator is speed. If Sonnet is your baseline, Sonic offers the same reasoning ability but with response times that feel instantaneous. Unless something changes in pricing or reliability, this could easily become my daily driver.

Quick Reference

- Access: Select “Sonic” in Opencode’s model selector (no invite required during stealth)

- Pricing: Free during stealth; official pricing TBD

- Best for: Day-to-day coding tasks, CRUD features, codebase comprehension, Rails development

- Avoid for: Nothing obvious yet — but keep human oversight on critical code

- API docs: Not yet public

- Hot take: As I posted on X, this feels like my Sonnet replacement should I be forced to not have Sonnet. @robzolkos

August 4, 2025

Two Days. Two Models. One Surprise: Claude Code Under Limits - The upcoming weekly usage limits announced by Anthropic for their Claude Code Max plans could put a dent in the workflows of many developers - especially those who’ve grown dependent on Opus-level output.

I’ve been using Claude Code for the last couple of months, though nowhere near the levels I’ve seen from the top 5% of users (some of whom rack up thousands of dollars in usage per day). I don’t run multiple jobs concurrently (though I’ve experimented), and I don’t run it on GitHub itself. I don’t use git worktrees (as Anthropic recommends). I just focus on one task at a time and stay available to guide and assist my AI agent throughout the day.

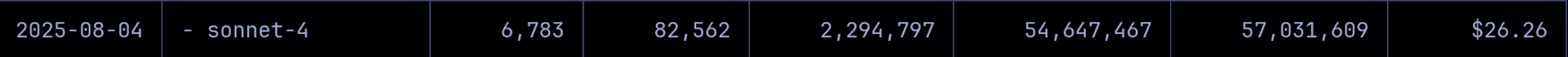

On Friday, I decided to spend the full day using Opus exclusively across my usual two or three work projects. Nothing unusual - a typical 8-hour day, bouncing between tasks. At the end of the day, I measured my token usage with the excellent ccusage utility, which calculated what that usage would have cost via the API.

Then today (Monday), I repeated the experiment - this time using Sonnet exclusively. Different tasks of course, but the same projects, similar complexity, and the same eight-hour block. Again I recorded the token usage.

Here’s what I found:

- Token usage was comparable.

- Sonnet’s cost was significantly lower (no surprise)

- And the quality? Honestly, surprisingly good.

Sonnet held up well for all of my coding tasks. I even used it for some light planning work and it got the job done (not as well as Opus would have but still very very good).

Anthropic’s new limits suggest we’ll get 240-480 hours/week of Sonnet, and 24-40 hours/week of Opus. Considering a full-time work week is 40 hours, and there are 168 hours in a week, I think the following setup might actually be sustainable for most developers:

- Sonnet for hands-on coding tasks

- Sonnet + GitHub for code review and analysis

- Opus for high-level planning, design, or complex architectural thinking
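To make the arithmetic behind that split concrete, here is a small sketch that compares the quoted weekly limits with a 40-hour work week. The figures are the ones quoted above; the function and constant names are just for illustration.

```python
WORK_WEEK_HOURS = 40   # full-time work week, as stated in the post
HOURS_IN_WEEK = 168    # 7 days * 24 hours

# (low, high) weekly hour limits quoted in the post.
LIMITS = {"Sonnet": (240, 480), "Opus": (24, 40)}


def coverage(limit_hours, needed_hours=WORK_WEEK_HOURS):
    """Return how many work weeks a given hour limit covers."""
    return limit_hours / needed_hours


for model, (low, high) in LIMITS.items():
    print(
        f"{model}: {coverage(low):.1f}x to {coverage(high):.1f}x of a "
        f"{WORK_WEEK_HOURS}-hour week; low end exceeds the "
        f"{HOURS_IN_WEEK} hours in a week: {low > HOURS_IN_WEEK}"
    )
```

Even the low end of the Sonnet range is six times a 40-hour week and more than the 168 hours a week contains, while the Opus range covers between 60% and 100% of a work week. That is why Opus is the one worth reserving for planning and architecture.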

I highly recommend you be explicit about which model you want to use for your custom slash commands and sub-agents. For slash commands there is a model attribute you can put in the command front matter. Release 1.0.63 also allows setting the model in sub-agents.
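One way to check that a command or sub-agent file names its model explicitly is to read the front matter and look for the model attribute. The sketch below is a deliberately naive parser for illustration only: the sample file content, the `description` key, and the value `sonnet` are placeholders, and a real check might prefer a proper YAML parser.

```python
def front_matter_model(text):
    """Return the `model` value from a leading front matter block, or None."""
    lines = text.splitlines()
    # Front matter must start on the very first line with '---'.
    if not lines or lines[0].strip() != "---":
        return None
    for line in lines[1:]:
        if line.strip() == "---":
            break  # end of the front matter block
        key, sep, value = line.partition(":")
        if sep and key.strip() == "model":
            return value.strip() or None
    return None


# Hypothetical command file content; the model value is a placeholder.
EXAMPLE_COMMAND = """---
description: Review the current diff
model: sonnet
---
Review the staged changes and list any risky edits.
"""

print(front_matter_model(EXAMPLE_COMMAND))
print(front_matter_model("Just a prompt with no front matter."))
```

Running this over your own command and sub-agent files gives a quick list of the ones that would otherwise fall back to whatever model the session happens to be using.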

I would love to see more transparency in the Claude Code UI about where we sit in real time against our session and weekly limits. I think if developers saw this data they would adjust their usage to suit. We shouldn’t need third-party libraries to track and report this information.

Based on this pattern, I don’t think I’ll hit the new weekly limits. But we’ll see - I’ll report back in September. And of course there is nothing stopping you from trialing other providers and models and even other agentic coding tools and really diving deep into using the best model for the job.

August 3, 2025

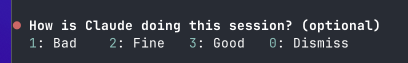

Claude Code's Feedback Survey - It seems this weekend a bunch of people have reported seeing Claude Code ask them for feedback on how well it is doing in their current session. It looks like this:

And everyone finds it annoying. I feel things like this are akin to advertising. For a paid product, feedback surveys like this should be opt in. Ask me at the start of the session if I’m ok in providing feedback. Give me the parameters of the feedback and let me opt in. Don’t pester me when I’m doing work.

I went digging in the code to see if maybe there is an undocumented setting I could slam into settings.json to hide this annoyance. What I found instead is an environment variable that switches it on even more!

CLAUDE_FORCE_DISPLAY_SURVEY=1 will show that sucker lots!

These are the conditions that will show the survey:

- A minimum time before first feedback (600 seconds / 10 minutes)

- A minimum time between feedback requests (1800 seconds / 30 minutes)

- A minimum number of user turns before showing feedback

- Some probability settings

- Some model restrictions (only shows for certain models) - I’ve only had it come up with Opus.
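Put together, the conditions above amount to a gate along these lines. This is an illustrative reconstruction and not Claude Code's actual implementation: only the 600 and 1800 second values come from the list, while the turn threshold, probability, allowed models, and the way the force flag bypasses the checks are all assumptions.

```python
import random

MIN_SECONDS_BEFORE_FIRST = 600   # 10 minutes, from the list above
MIN_SECONDS_BETWEEN = 1800       # 30 minutes, from the list above


def should_show_survey(session_seconds, seconds_since_last, user_turns, model, *,
                       min_turns, probability, allowed_models,
                       force=False, rng=random.random):
    """Decide whether to show the survey (hypothetical logic).

    seconds_since_last is None when no survey has been shown yet.
    """
    if force:
        # Assumption: the force flag skips every other check.
        return True
    if model not in allowed_models:
        return False
    if user_turns < min_turns:
        return False
    if seconds_since_last is None:
        if session_seconds < MIN_SECONDS_BEFORE_FIRST:
            return False
    elif seconds_since_last < MIN_SECONDS_BETWEEN:
        return False
    # Finally, roll the dice against the configured probability.
    return rng() < probability


# Placeholder settings; the real thresholds are not given in the post.
SETTINGS = {"min_turns": 3, "probability": 0.5, "allowed_models": {"opus"}}

print(should_show_survey(700, None, 5, "opus", rng=lambda: 0.0, **SETTINGS))
print(should_show_survey(300, None, 5, "opus", rng=lambda: 0.0, **SETTINGS))
```

The first call passes every check; the second fails because the session is under ten minutes old.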

Asking for feedback is totally ok. But don’t interrupt my work session to do it. I hope this goes away or there is a setting added to opt out completely.