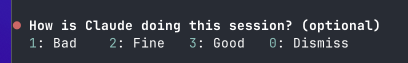

Claude Code's Feedback Survey - It seems this weekend a bunch of people have reported seeing Claude Code ask them for feedback on how well it is doing in their current session. It looks like this:

And everyone finds it annoying. I feel things like this are akin to advertising. For a paid product, feedback surveys like this should be opt-in. Ask me at the start of the session if I’m OK with providing feedback. Give me the parameters of the feedback and let me opt in. Don’t pester me when I’m doing work.

I went digging in the code to see if maybe there is an undocumented setting I could slam into settings.json to hide this annoyance. What I found instead is an environment variable that switches it on even more!

CLAUDE_FORCE_DISPLAY_SURVEY=1 will show that sucker lots!

These are the conditions that decide when the survey shows:

- A minimum time before first feedback (600 seconds / 10 minutes)

- A minimum time between feedback requests (1800 seconds / 30 minutes)

- A minimum number of user turns before showing feedback

- Some probability settings

- Some model restrictions (only shows for certain models) - I’ve only had it come up with Opus.
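
To make those conditions concrete, here is a rough Python sketch of how a gate like this could fit together. It is not the actual Claude Code implementation: the names `SurveyConfig` and `should_show_survey` are made up, the order of the checks is a guess, and so is the idea that `CLAUDE_FORCE_DISPLAY_SURVEY=1` skips every other check. Only the 600 and 1800 second values come from what I found. The turn count, probability, and model list are left for the caller to fill in because I don't know the real values.

```python
import random
from dataclasses import dataclass
from typing import Callable, FrozenSet, Mapping, Optional


@dataclass(frozen=True)
class SurveyConfig:
    # Unknown in the post: the caller must supply placeholder values.
    min_user_turns: int
    probability: float
    allowed_models: FrozenSet[str]
    # Values reported in the post.
    min_seconds_before_first: float = 600.0   # 10 minutes
    min_seconds_between: float = 1800.0       # 30 minutes


def should_show_survey(
    cfg: SurveyConfig,
    *,
    env: Mapping[str, str],
    model: str,
    user_turns: int,
    session_seconds: float,
    seconds_since_last: Optional[float],
    rng: Callable[[], float] = random.random,
) -> bool:
    """Hypothetical gate combining the conditions listed above."""
    # Assumption: the force flag bypasses every other condition.
    if env.get("CLAUDE_FORCE_DISPLAY_SURVEY") == "1":
        return True
    # Model restriction: only some models are eligible.
    if model not in cfg.allowed_models:
        return False
    # Minimum number of user turns.
    if user_turns < cfg.min_user_turns:
        return False
    if seconds_since_last is None:
        # No survey shown yet: wait out the initial delay.
        if session_seconds < cfg.min_seconds_before_first:
            return False
    elif seconds_since_last < cfg.min_seconds_between:
        # A survey was shown recently: wait out the cooldown.
        return False
    # Finally, roll the dice.
    return rng() < cfg.probability
```

Called with something like `should_show_survey(cfg, env=os.environ, model=..., user_turns=..., session_seconds=..., seconds_since_last=None)`, every ordinary check has to pass before the probability roll even happens, which would explain why the survey feels sporadic rather than constant.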

Asking for feedback is totally OK. But don’t interrupt my work session to do it. I hope this goes away, or that a setting is added to opt out completely.